With the Swipe of a Tongue, CSUN Prof Makes Touchscreen Capabilities Accessible to Those Without Use of Their Arms

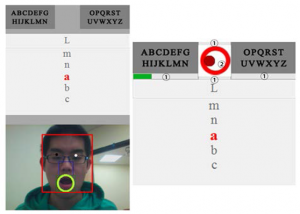

Photo courtesy of Niu, S., Liu, L., & McCrickard, D. S. Tongue-able Interfaces: prototyping and evaluating camera-based tongue gesture input system. Smart Health, 11, 16-28.

Vehicular accidents, electric shock, war injuries and birth defects can result in temporary or permanent paralysis of the upper limbs, leaving millions of people worldwide with limited use of their hands and unable to interact with computers and other devices designed for hand use.

With a simple swipe of a tongue, California State University, Northridge computer science professor Li Liu and his students hope to open the doors to the touchscreen world of smartphones and tablets for those with upper-body mobility challenges.

Prototype of tongue-able user interface.

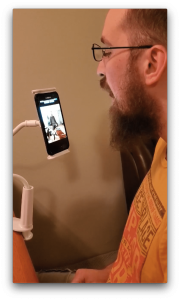

Photo courtesy of Garret Richardson

Some assistive technology currently on the market can respond to eye movement, but it is limited in the number of tasks it can perform and lacks precision in its responses.

“It was during a CSUN Conference (CSUN’s annual Assistive Technology Conference) a couple of years ago when I really got inspired to look for a more efficient and effective way for people who cannot use their arms to interact with their computers,” Liu said.

By tapping into the capabilities of the cameras embedded in laptop computers, Liu and a collaborator from Virginia Tech, Shuo Niu, developed a program that uses image processing on the camera feed to zero in on the motions of a person’s tongue and translate them into actions, whether that means moving the cursor on the screen or typing text in different software applications on a desktop computer.
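As a rough illustration of the idea of turning tongue motion into cursor movement, the sketch below maps a tongue-tip position, assumed to have been detected already in a camera frame, to a cursor step. It is not the researchers' implementation; the function name `tongue_to_cursor_step`, the dead-zone threshold and the speed value are all hypothetical choices made for this example.

```python
def tongue_to_cursor_step(tip_x, tip_y, mouth_x, mouth_y, mouth_width,
                          dead_zone=0.15, speed=20):
    """Map a tongue-tip offset from the mouth center to a cursor step in pixels.

    All positions are pixel coordinates in a camera frame. Detecting the
    tongue tip and the mouth is assumed to happen in an earlier step that
    is not shown here (hypothetical).
    """
    if mouth_width <= 0:
        raise ValueError("mouth_width must be positive")

    # Normalize by mouth width so the result does not depend on how far
    # the user sits from the camera.
    dx = (tip_x - mouth_x) / mouth_width
    dy = (tip_y - mouth_y) / mouth_width

    # Ignore small offsets so a resting tongue does not move the cursor.
    step_x = 0 if abs(dx) < dead_zone else round(dx * speed)
    step_y = 0 if abs(dy) < dead_zone else round(dy * speed)
    return step_x, step_y
```

In this sketch, a tongue tip held well to one side of the mouth center produces a horizontal step, while small movements near the center are ignored; a real system would tune both values per user.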

As assistive technology transitions to mobile interfaces, Liu is continuing to develop his tongue-computer interface for mobile platforms. Liu and Garret Richardson, a recent graduate student, created a system-level service with TensorFlow Lite, an open-source deep learning framework from Google, and added the service to the Android ecosystem. The service allows Android devices, from smartphones to tablets and laptops, to read where a user’s tongue is pointing in order to activate apps, and to capture different tongue gestures.

“I would love to see this get to the point where it is available to anyone who could use it,” Liu said. “At its most basic level, it allows people with upper mobility disabilities to use their smart devices or computers to their fullest potential.”

Each year, Liu takes his students to CSUN’s annual Assistive Technology Conference, the world’s largest conference dedicated to advancing knowledge and the use of technology to improve the lives of individuals with disabilities.

“The world of assistive technology presents so many opportunities for students to find personal and professional success,” Liu said. “I want them to understand that what they are learning can make a difference in people’s lives.”

Shuo Niu is now an assistant professor at Clark University, and Garret Richardson is now a software engineer at L3Harris Technologies. For more information about accessibility research at CSUN, please visit https://www.csun.edu/ecs/a11y
